Varnish command line tools

Varnish comes with several very useful command line tools that can be a bit hard to get the hang of. The list below is by no means exhaustive, but it gives an introduction to some of the tools and their use cases.

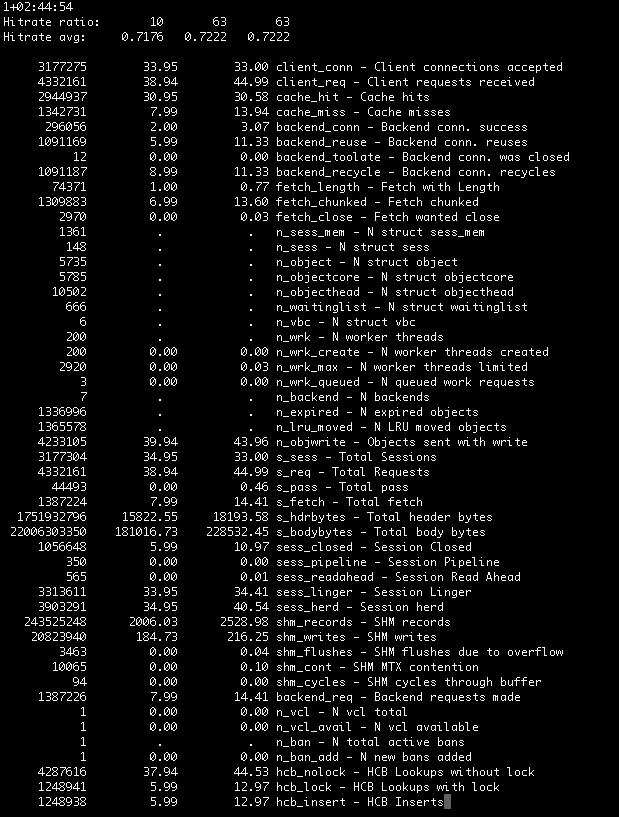

varnishhist

Easily dismissed as a geeky graph with little information, varnishhist is actually extremely useful for getting an overview of the overall status of your backend servers and Varnish.

The pipes (|) are requests served from the cache whereas the hash signs (#) are requests to the backend. The X axis is a logarithmic scale for request times. So the histogram above shows that we have a good amount of cache hits that are served really fast, whereas roughly half of the backend requests take a bit more than 0.1 s. As with most of the other command line applications, you can filter out the data you need with a regex, or show only backend requests or only cache hits. See https://www.varnish-cache.org/docs/trunk/reference/varnishhist.html for a complete list of parameters.
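
To make the logarithmic X axis concrete, here is a minimal sketch of how request times could be grouped into power-of-ten buckets. This is only an illustration of the idea: the function names and sample timings are made up, and varnishhist itself uses a finer bucketing than whole powers of ten.

```python
import math
from collections import Counter


def log_bucket(seconds):
    """Return the power-of-ten bucket for a positive request time in seconds."""
    return math.floor(math.log10(seconds))


def histogram(request_times):
    """Count how many request times fall into each power-of-ten bucket."""
    return Counter(log_bucket(t) for t in request_times)


# Hypothetical request times in seconds: fast cache hits and slower backend fetches.
times = [0.0004, 0.0007, 0.002, 0.15, 0.2, 2.5]
counts = histogram(times)

for bucket in sorted(counts):
    # One row per bucket; each '#' is one request.
    print(f"1e{bucket:+d} s  {'#' * counts[bucket]}")
```

A request taking a bit more than 0.1 s lands in the 1e-1 bucket, which is where the backend requests in the screenshot pile up.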

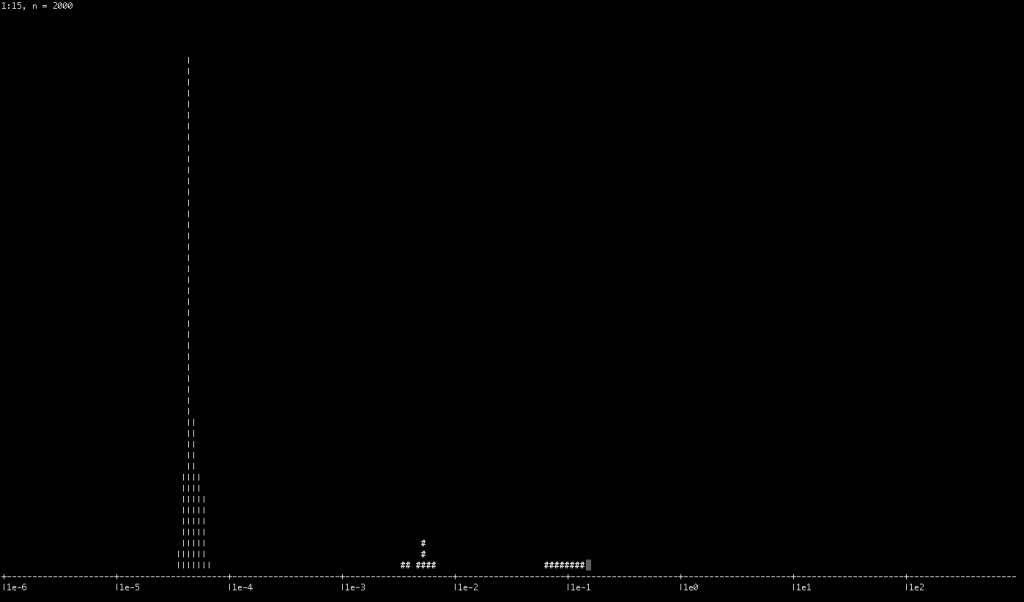

varnishstat

varnishstat can be used in two modes, both equally useful. If run with only "varnishstat" you will get a continuously updated list of the counters that fit on your screen. If you want all the values for in-depth analysis you can instead use "varnishstat -1" to print the current counter values once.

Now a couple of important figures from this image:

- The very first row is the uptime of Varnish. This instance had been restarted 1 day, 2 hours and 44 minutes before the screenshot.

- The counters below that are the average hit rate of Varnish. The first row is the timeframe and the second is the average hit rate. If varnishstat is kept open for longer, the second timeframe will go up to 100 seconds and the third to 1000 seconds.

- As for the list of variables below, the values correspond to the total value, followed by the current value and finally the average value. Some rows that are particularly interesting would be:

- cache_hit/cache_miss, to see if you have monumental miss storms.

- The relationship between client_conn and client_req, to see if connections are being reused. In this case there is only API traffic, where very few connections are kept open, so the almost 1:1 ratio is to be seen as normal.

- The relationship between s_hdrbytes and s_bodybytes is also rather interesting, as you can see how much of your bandwidth is actually being used by the headers. If s_hdrbytes is a high percentage of your s_bodybytes, you might want to consider whether all your headers are actually necessary and useful.
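
The relationships above can be sketched as a few ratios. The snippet below is a minimal illustration that assumes you have already copied the counter values into a dictionary by hand; the helper names and the sample numbers are made up and do not come from the screenshot.

```python
def ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def summarize(counters):
    """Derive the ratios discussed above from raw varnishstat counter totals."""
    hits = counters["cache_hit"]
    misses = counters["cache_miss"]
    return {
        # Share of cache lookups that were served from the cache.
        "hit_rate": ratio(hits, hits + misses),
        # Close to 1.0 means connections are hardly being reused.
        "requests_per_connection": ratio(counters["client_req"], counters["client_conn"]),
        # Header bytes relative to body bytes.
        "header_to_body": ratio(counters["s_hdrbytes"], counters["s_bodybytes"]),
    }


# Hypothetical counter totals, for illustration only.
sample = {
    "cache_hit": 900,
    "cache_miss": 100,
    "client_conn": 1000,
    "client_req": 1050,
    "s_hdrbytes": 250,
    "s_bodybytes": 1000,
}

summary = summarize(sample)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

With these sample numbers, nine out of ten lookups are hits, connections are barely reused, and the headers amount to a quarter of the body bytes.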

varnishtop

Varnishtop is a very handy tool for getting filtered information about your traffic. Since a lot of high-traffic Varnish sites do not have access logs on their backend servers, this can be of great use.

tx are always requests to backends, whereas rx are requests from clients to varnish. The examples below should clarify what I mean.

Some handy examples to work from:

See what requests are most common to the backend servers.

varnishtop -i txurl

See what user agents are the most common among the clients.

varnishtop -i RxHeader -C -I ^User-Agent

See what user agents are commonly accessing the backend servers, compare to the previous one to find clients that are commonly causing misses.

varnishtop -i TxHeader -C -I ^User-Agent

See what cookie values are the most commonly sent to Varnish.

varnishtop -i RxHeader -I Cookie

See what hosts are being accessed through varnish. Will of course only give you useful information if there are several hosts behind your varnish instance.

varnishtop -i RxHeader -I '^Host:'

See what accept-charsets are used by clients

varnishtop -i RxHeader -I '^Accept-Charset'

varnishlog

varnishlog is yet another powerful tool for logging the requests you want to analyze. It is also very useful without parameters when developing your VCL, as you can see the exact results of your changes in all their verbosity. See https://www.varnish-cache.org/docs/trunk/tutorial/logging.html for the manual and a few examples. You will find its syntax very similar to that of varnishtop.

One useful example for listing all details about requests resulting in a 500 status:

varnishlog -b -m "RxStatus:500"

varnishncsa

varnishncsa is a handy tool for producing Apache/NCSA formatted logs. This is very useful if you want to log the requests to Varnish and analyze them with one of the many available log analyzers that read such logs, for instance AWStats.

Send data to include files with dwoo

Sometimes you want to send some data, like a title or an id, to an included template with Dwoo. Even though it is described in the manual, I did not look there first because I mostly don't find what I look for there. So in case anyone else has the same bother, this will solve it:

{include(file='elements/google-like-button.tpl' url='http://www.eldefors.com')}

This will allow you to use $url in the included template just as if it was a local variable.

More features that one might miss or look too long for in the docs:

Scope: $__.var will always fetch the 'var' variable from the template root. This is useful for loops where the scope is changed.

Loop names: Adding name="loopname" lets you access its variables inside a nested construct by accessing $.foreach.loopname.var (replace foreach with your loop element).

Default view for empty foreach: By adding an {else} before your end tag you can output data for empty variables passed, without an extra element.

What is Dwoo, some might wonder. It is a template system with a syntax similar to Smarty. It has however been rewritten a lot and is in my experience working great both performance-wise and feature-wise. It is very easy to extend, and by far the most indispensable feature to me is template inheritance. This is lacking in most PHP templating systems, but with Dwoo you can apply the same thinking as with normal class inheritance. Since many elements are the same on all your pages, and even more are shared within the same section, you can define blocks which you override (think of them as class methods) and for instance create a general section template that inherits the base layout template, and then let every section page template inherit this.
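
To illustrate the class inheritance analogy, here is a minimal sketch in Python rather than in Dwoo template syntax. The class and method names are invented for the example: each method plays the role of a block, and each subclass plays the role of a template that inherits another one.

```python
class BaseLayout:
    """Plays the role of the base layout template; each method is a 'block'."""

    def title(self):
        return "My site"

    def content(self):
        return ""

    def render(self):
        # The base layout decides where the blocks end up on the page.
        return f"<title>{self.title()}</title><body>{self.content()}</body>"


class SectionLayout(BaseLayout):
    """Plays the role of a section template that overrides only the title block."""

    def title(self):
        return "Blog - " + super().title()


class PostPage(SectionLayout):
    """Plays the role of a page template that overrides only the content block."""

    def content(self):
        return "Hello"


page = PostPage().render()
print(page)
```

The page only defines what differs from its section, and the section only defines what differs from the base layout, which is the same thinking the block overrides give you in templates.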

Alternative Android markets

One good thing about the Android system is the ability to create your own application markets, to cover functions unavailable on the default Android Market or to get an application market onto devices not approved by Google. Since I couldn't find any good lists of the markets available, I browsed around my Android Market mailbox and searched the web a bit to come up with this list:

SlideMe (4/5)

Website: slideme.org

Number of apps: ~4,000

Payment methods: Paypal, credit and debit cards, amazon payments.

Activity: Good. Top app has more than 150k downloads. Very low compared to the default market but high for an alternative.

Extras: Developers can get payments to a SlideMe Mastercard. Downloadable native client called "SAM"!

Handster (2/5)

Website: handster.com

Number of apps: ~4,000 (>10,000 for all platforms)

Payment methods: Paypal, credit and debit cards.

Activity: Seems low. Public number of ratings show 0-5 for most popular apps.

Extras: All platforms at one place.

Comments: Offering locales where they seem to have made automatic translations, at best, makes it seem less reliable.

AndAppstore (1/5)

Website: andappstore.com

Number of apps: ~1,800

Payment methods: Only paypal.

Activity: Fairly low. Around 60 comments posted for their native client.

Extras: Native android client.

Comments: Very basic site. Comments without ratings seem to lower the overall rating. Complaints about non-delivered apps in their client comments. Seemed promising but in the end didn't inspire any trust.

OpenMarket (-/5)

Comments: Being specialized for HTC phones and South Africa makes it very narrow. Web 2.0 catchphrases and unbrowsable categories didn't catch my interest any further.

Amazon appstore (2/5)

Website: amazon.com (?)

Activity: Unknown

Comments: Yearly fee for developers of $99, which is 3 times what you pay for your Google market account. First year waived. Needed to sell apps on amazon.com. I still don't see the need or what is so special about this market, unless they are coming out with their own device.

PocketGear (2/5)

Website: pocketgear.com

Number of apps: ~4,500 in android section

Payment methods: Paypal, credit and debit cards.

Activity: Ok at best. 7,000 downloads of angry birds compared to millions on default client.

Extras: All platforms.

pdassi (3/5)

Website: android.pdassi.com

Number of apps: ~4,500 in android section

Payment methods: Paypal, credit and debit cards.

Activity: ~13,000 downloads on most popular android application. Other sections much more well used it seems.

Extras: Native android client. All platforms. Good localization for german, dutch and italian.

Comments? Did I miss any? Do you know any devices that ship with an alternative Android market?

Free as in promotional trick

MPEG LA delivers the widespread news that H.264 will be royalty free permanently.

This is merely a trick to promote its adoption in HTML 5 and its popularity in the mainstream. Nothing really changes with this statement. H.264 did not become more free in any important aspect.

Firstly it does not change anything for 4 years. The previous license was valid until 2014. We all know that is a long time given current tech progress.

Secondly, only free video broadcasting is included. Should you decide on any alternative delivery method, want to actually create videos, or do anything else, it is not included.

This only makes it free to actually transfer the bits that you already have. A limitation that I would argue should not even be allowed to exist. And even if you still feel safe, MPEG LA can change this license at any time.

Microsoft does not love open source

Microsoft now claims to love open source:

http://www.networkworld.com/news/2010/082310-microsoft-open-source.html

There is a very telling quote from the interview about Ballmer describing Linux as a cancer:

The mistake of equating all open source technology with Linux was "really very early on," Paoli says. "That was really a long time ago," he says. "We understand our mistake."

Reading the original quote it is clear that they still dislike Open Source:

The way the license is written, if you use any open-source software, you have to make the rest of your software open source. If the government wants to put something in the public domain, it should. Linux is not in the public domain. Linux is a cancer that attaches itself in an intellectual property sense to everything it touches. That's the way that the license works.

What they have, and had, problems with is actually the GPL licenses and how they attribute copyright. This limits Microsoft's ability to make use of open source efforts in their proprietary software.

What Microsoft should be saying is that they support open source only when they can make use of it in their world of closed source. This is not working WITH open source, it is working AGAINST open source.

Android versions in the wild

Google recently released figures on the version numbers of Android phones accessing the Android Market. It just validates what almost all developers already know: all 1.5+ versions are important to support, and different screens are just as important.

Users are very fast to let developers know this, though. Already 3 months before the OTA update of Android 2.2 for my Nexus One, I got a message from a user about not being able to find an application in the 2.2 market. The error is common: a default manifest file that limits API versions. I couldn't have tested the application on a higher API level at development time, so its absence in the untested OS version was not really an error. However, it shows how easy it is to get feedback from users to correct such errors.

I have also gotten feedback on enabling the 2.2 install device (SD card or phone memory) as well as automatically generated crash reports that are also new in android 2.2. Adding to that it is for an application exclusively for Sweden where no 2.2 device has even been sold as of yet (but of course imported in different shady ways).

The chart below shows how important it is to keep backwards compatibility in your applications, just like for web development and old browsers. Luckily the Android emulators are very good and there's a fully functioning image for each and every API level. There are also other developer emulator images circulating where you can test the functions missing in the default images, such as a paid-app enabled market.

Source: http://developer.android.com/resources/dashboard/platform-versions.html

Huge server architectures

I enjoy reading about the architecture of some of the bigger Internet-related systems around, be it database sharding, Hadoop usage, choice of languages or development methods. I will continuously try to post some numbers from these adventures. Here is a start, together with links that can be followed for more details.

Facebook

From Data Center Knowledge, quoting a talk at Structure 2010 by Facebook’s Jonathan Heiliger.

- 400 million users.

- 16 billion minutes spent on Facebook each day.

- 3 billion new photos per month

- More than a million photos viewed per second.

- Probably over 60,000 servers to date (Data Center Knowledge)

- Thousands of memcache servers. Most likely the biggest memcache cluster (Pingdom).

Akamai

- 65,000 servers spanning 70 countries and 1000 networks.

- Hundreds of billions of "Internet interactions" per day

- Traffic peaks at 2 Terabits per second

Source: Akamai

Google

- Server number estimated at 450,000 in 2006 (High Scalability)

- Server number estimated at 1 million by Gartner (Pandia)

Why Swedish web TV isn’t as big as it could and should be

Swedish TV channels have well over a million weekly users watching full episodes on their web TV players. A big majority of it is the ad-free SVT (state television), which is funded by taxpayers. The rest is scattered amongst the 3 big commercial actors (TV4, ProSieben with Kanal 5/9 and MTG with TV3/6).

With a somewhat unstable connection it is easy to spot some easily fixed ways in which they drive away their viewers.

Doubling the ads

All of the ad-supported channels effectively throw ads at you: first when you start the clip and later when you pass the ad spots in the video clip. However, they do not handle it any differently should you want to fast forward to minute 20, where you stopped watching on the TV or where your stream disconnected on the last try. This means you first have to wait a good while for the introductory ad, take a second to fast forward, and then wait a minute more for the in-video ads that you fast forwarded past.

This nuisance is sure to scare away a lot of people with unstable connections or those watching only short parts of the videos. It is incredibly easily fixed by putting the second ad spot after 10 minutes of watching the video instead of 10 minutes into the clip. It takes a technology change at a level where the channel itself has no direct control, though.
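
The fix can be sketched as a scheduler that counts seconds actually watched instead of looking at the position in the clip. This is a minimal illustration only: the class, its method names and the 10 minute default are assumptions for the example, not how any of the players mentioned here are built.

```python
class AdScheduler:
    """Trigger mid-roll ads based on watched time rather than clip position."""

    def __init__(self, interval_seconds=600):
        self.interval = interval_seconds
        self.watched = 0.0
        self.next_ad_at = interval_seconds

    def on_playback(self, seconds_played):
        """Record seconds actually played; return True when an ad break is due."""
        self.watched += seconds_played
        if self.watched >= self.next_ad_at:
            self.next_ad_at += self.interval
            return True
        return False

    def on_seek(self, new_position):
        """Seeking moves the clip position but adds no watched time, so no ad is due."""
        return False


scheduler = AdScheduler(interval_seconds=600)
seek_triggers_ad = scheduler.on_seek(1200)     # jump straight to minute 20
first_five_min = scheduler.on_playback(300)    # 5 minutes watched so far
second_five_min = scheduler.on_playback(300)   # 10 minutes watched so far
print(seek_triggers_ad, first_five_min, second_five_min)
```

A viewer who fast forwards to minute 20 after a disconnect only gets the next ad once another 10 minutes have really been watched.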

Unreliable fast forwarding

If you decide to do real streaming instead of pseudo-streaming, then at least make sure that the one feature the user will notice actually works.

Supporting slow connections

Be it an unreliable 3G connection or a foreign connection, a lot of people are not on stable 2+ Mbps connections and still want to watch the videos. There are two easy ways to support them: either you make it a pseudo-stream where you can preload the entire movie (see YouTube), or you also make available a lower-bitrate video that actually gets loaded, instead of leading users into buffering land. None of the Swedish web TV stations does this anywhere close to well.
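
The second option, serving a lower-bitrate version when the connection cannot keep up, could look roughly like the sketch below. The list of available bitrates and the 80% headroom factor are assumptions made up for the example.

```python
# Hypothetical set of encoded versions of the same video, in kbit/s.
RENDITIONS_KBPS = [300, 700, 1500, 2500]


def pick_bitrate(measured_kbps, renditions=RENDITIONS_KBPS, headroom=0.8):
    """Pick the highest bitrate that fits within a share of the measured bandwidth.

    Falls back to the lowest available bitrate when nothing fits, so that
    playback at least has a chance instead of never starting.
    """
    usable = measured_kbps * headroom
    candidates = [r for r in sorted(renditions) if r <= usable]
    return candidates[-1] if candidates else min(renditions)


for bandwidth in (200, 1000, 5000):
    print(bandwidth, "kbit/s available ->", pick_bitrate(bandwidth), "kbit/s video")
```

The headroom leaves some margin for a connection that fluctuates, which is exactly the situation on an unreliable 3G link.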

Ad bitrate

If your users can view the video, they should also be able to view the ads without buffering. The ad bitrate has to be the same as, or lower than, that of the normal video playback to prevent this.

Ubiquitous error reports

A lot of web TV players want to let users know when there is an error, so that they know this is not the best experience they can get. However, many of the players have so many false positives that it soon becomes normal to get a semi-transparent layer over your movie every now and then, saying there is an error with the video playback. Yet the only problem with the video is the error message covering it!

All of these problems together can create many very annoying experiences, especially for new web TV users or new Internet users, who are easily scared away. The problem, however, is that competence in these areas is most often nowhere to be found in the broadcasting companies. Instead they have outsourced every part of the video processing and delivery, sometimes to several different parties. The route to fixing even one of the problems may be very long this way and may even mean having to choose a different provider.

Why I still don’t want to like Apple products

Apple really continues to show they want to lock in and control even their potential users. Constantly I see new reasons why Apple are working against open standards, freedom in development and freedom of choice. Some recent examples:

HTML 5 Showcase

Stupid browser lock-in! Not even the index page, which does not showcase any HTML5 at all, is accessible in any browser other than Safari. A warning, maybe even as a modal dialog, about browsers and their support could be convenient, but to first block all browsers and then talk about the open web standards that are the future of the web gets, for lack of a better word, silly. While Apple in general, with Safari and their website, works against open standards and freedom, one must admit that their decision to open source the WebKit rendering engine is a step in the other direction. But to me everything points to this being a strategic decision to get good, stable and fast development of a rendering engine that is becoming more and more important in their other proprietary systems.

WWDC for (almost) everyone

In 2010 you do not need to require registration, nor the use of a proprietary download manager (iTunes), to be able to distribute video content. I was going to look at some video presentations from the conference, but when I realized I had to download Apple software where I need to be very careful to unclick 5 items to not get extra shortcuts, update managers or codecs I do not want, I turned around. Obviously this becomes even more bizarre as they showcase the <video> element in the HTML 5 demos.

Good examples of how you make conference content available rather than promote lock-in:

Google I/O 2010

FOSDEM

The flash opinion

Whereas I want open standards to as high a degree as possible, there is one thing that I feel is more important, and that is CHOICE. The choice of the developer and the choice of the user. There is one thing you should do to steer this choice, and that is to give information. What Apple decides to do with Flash is to make the choice themselves for both the user and the developer.

With the latest version of the Android mobile operating system it is for instance possible to deploy Adobe Air applications on Android cellphones. This gives a lot more ready-made applications and potential future developers, and saves a huge amount of time in converting Flash applications to the native languages of the platforms. Apple argues that it is Adobe's intention not to help developers create the best application for iPad/iPod/iPhone but to create the best cross-platform application. They have reason to dislike this, as they have gotten such a good number of dedicated iPhone developers, but I hope times change so that users and developers get to make the choice. A good rating system lets users decide what apps are good, regardless of the code or framework they were written with. An open system lets others be part of the innovation, both with open and proprietary technologies. Let developers know the pros and cons of different development methods and work towards giving them as much choice as possible. Let users get the information about possible problems with some frameworks, size of downloads and what not, then let them choose and rate depending on their own experience. Users are the best user guides.

A first glance at hiphop

Facebook recently released their PHP on steroids, named HipHop, as open source. I listened to their presentation a while before that at the FOSDEM conference in Brussels and was, like many others, impressed, but not as enthusiastic as many others seem to be now.

Some say it's nothing new because there have been a small number of PHP compilers before or because there are opcode caches already. What HipHop does, however, is not only compile the code base to C++ but also process the code in several stages, for instance to use as specific a data type as possible. Facebook engineers are saying that they see a 30-50% performance improvement over PHP that is already boosted by APC. Indeed that is a huge deal given the number of application servers they use.

On the other hand, a lot of the attention it has gotten is much the same as what APC got: it is seen as a general-purpose performance booster. But as with APC, the results will be disappointing for most people, because on most websites the bulk of the application time is not spent in PHP code.

Facebook is indeed special compared to most websites. For instance they generally do no joins of data at the database level. That results in a lot more data in the application as well as more basic application logic.

There are several reasons why they often choose to exclude joins entirely. Among others, joins drain performance on the database servers, which are generally harder to scale. They are also very hard to do when you need to query a whole lot of servers (which can also be sharded by different factors) for each and every type of data you want to join.
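
To show what a join in the application instead of in the database can look like, here is a minimal sketch with in-memory dictionaries standing in for database shards. The modulo sharding rule, the function names and the sample data are assumptions made up for the example; they say nothing about how Facebook actually does it.

```python
def shard_for(user_id, shard_count):
    """Hypothetical sharding rule: users are spread over shards by id modulo."""
    return user_id % shard_count


def fetch_users(shards, user_ids):
    """Group the ids per shard, then do one lookup round per shard."""
    ids_by_shard = {}
    for user_id in user_ids:
        ids_by_shard.setdefault(shard_for(user_id, len(shards)), []).append(user_id)

    users = {}
    for shard_index, ids in ids_by_shard.items():
        shard = shards[shard_index]  # stands in for a query against one server
        for user_id in ids:
            if user_id in shard:
                users[user_id] = shard[user_id]
    return users


def join_posts_with_authors(posts, shards):
    """The 'join' happens in application code instead of in SQL."""
    authors = fetch_users(shards, {post["author_id"] for post in posts})
    return [dict(post, author=authors.get(post["author_id"])) for post in posts]


# Two in-memory dictionaries stand in for two database shards.
shards = [{2: "Bob", 4: "Dan"}, {1: "Alice", 3: "Carol"}]
posts = [{"id": 10, "author_id": 1}, {"id": 11, "author_id": 2}]

joined = join_posts_with_authors(posts, shards)
print(joined)
```

All the grouping and merging runs in the application layer, which is the kind of basic PHP logic that a compiler like HipHop can speed up.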

HipHop is made for the giants by a giant: the huge sites with a lot of traffic that have large amounts of data to query against. Smaller sites will benefit much less from its performance boost, as most of their time is spent in databases, caches, reading from disk etc. The results will also vary greatly depending on how much of the website's code base is mundane (basic constructs like loops, processing with non-dynamic variables etc). Basically everything that can be rewritten with static functions and variables is the most welcome target for the HipHop optimizations.

Even with some 10 commodity application servers, I would say that HipHop gives too small a performance boost to justify the time that needs to be spent learning, testing and maintaining the framework. For Facebook though, I can certainly see how it is very welcome even with several man-years of development costs, as they have thousands (?) of application servers and have good reasons not to drop PHP in most of the heavy parts of it.