
Exclusive Vulkan Vegas Promo Code 2024

Grab the Promo Code From Us!

Contents

- Are the Free Spins in Book of Dead Available Only to New Players?

- Which Bonuses Can a Vulkan Vegas Code Activate?

- Vulkan Vegas Promo Code 2024

- FAQ on Vulkan Vegas Codes

- Bonus: Coupon Code €1000 + No-Deposit Promotion

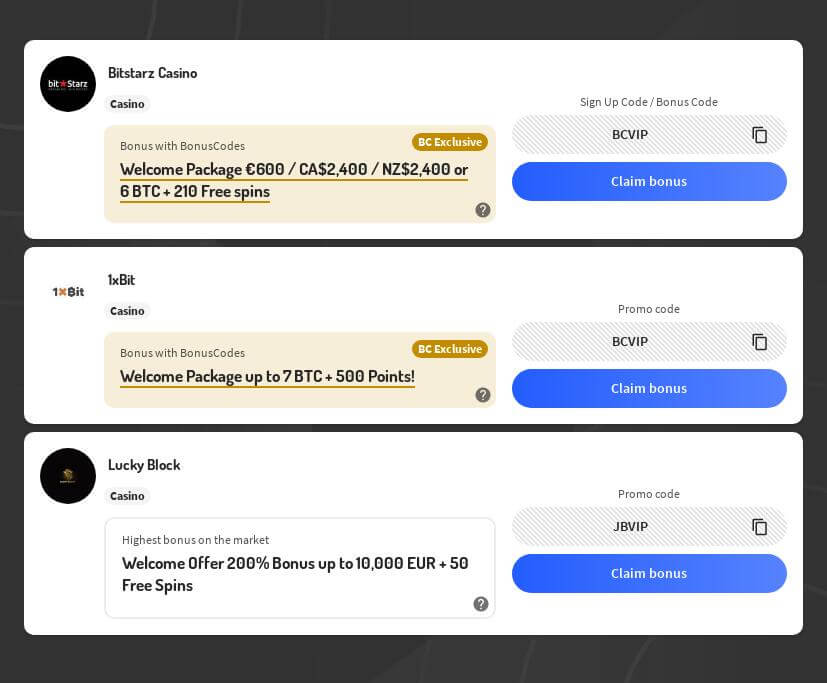

- Check Out the Promo Code Offers Shared by Other Casinos Too

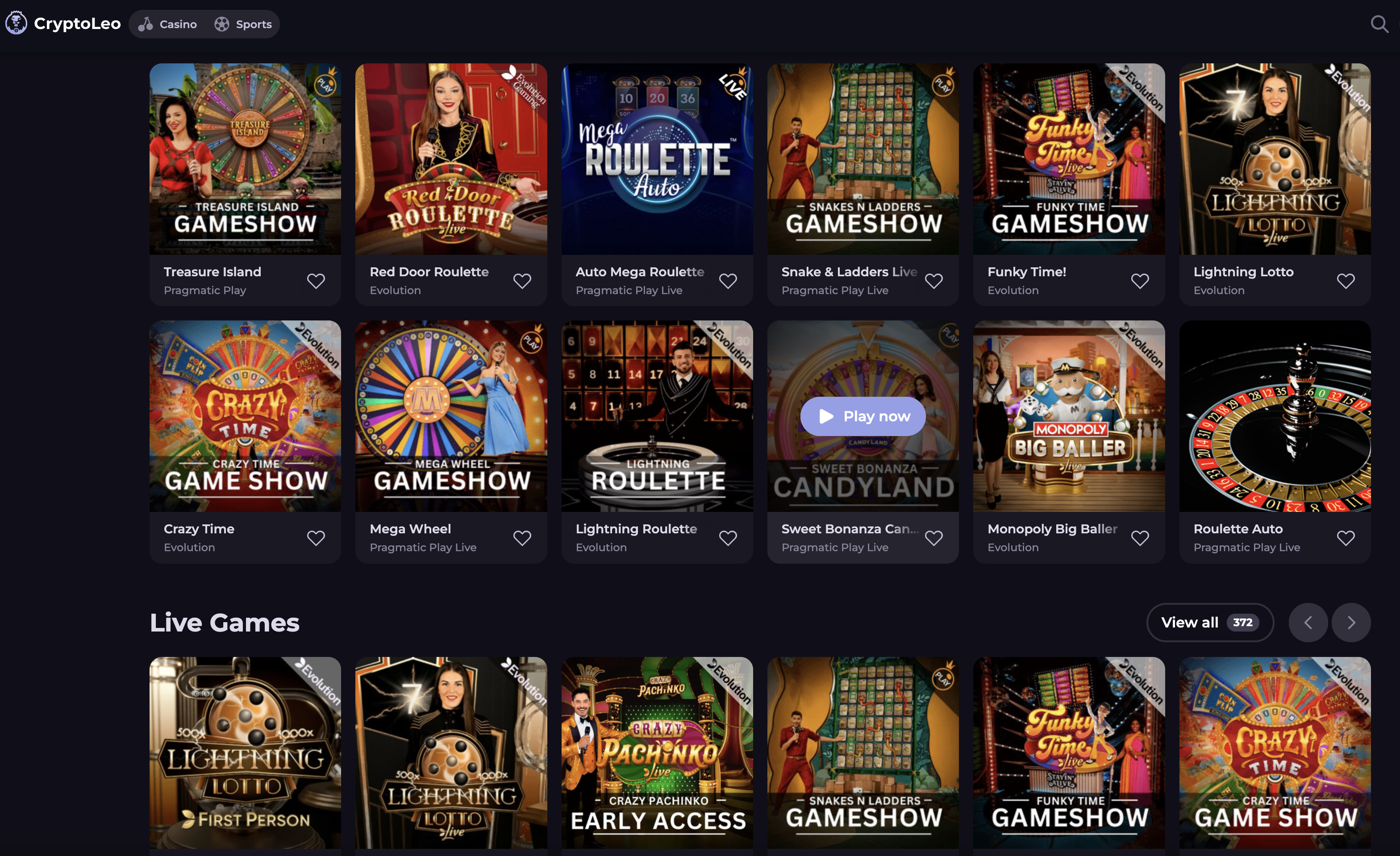

- New Games

- Who Owns and Operates Vulkan Vegas Casino? How Long Have They Been Operating?

- How to Find a Vulkan Vegas Bonus Code?

- Full Mobile Version for Android

- Vulkan Vegas Promo Code and No-Deposit Bonus for Today

- What Types of Bonuses Can a Vulkan Vegas Code Be Offered For?

- Insta Games

- See Our Best Offers From Other Casinos

- Top Game Providers at Vulkan Vegas

- Get Up to €/$1,500 + 150 Free Spins

- Can I Use the Promo Code on a Mobile Phone?

- How to Get the No-Deposit Registration Bonus at Vulkan Vegas?

- Bonus Code Terms and Conditions

- Vulkan Vegas Promo Code: Here Is the Latest Offer

- What Does the Vulkan Vegas Promo Code Offer You?

- Rules of Our Promotion

- Bonus Offer for Existing Vulkan Vegas Customers

- Extra High-Roller Casino Bonus Code (VIP Program)

- Vulkan Vegas Promo Code 2024 ✴️ The Best Promo Code

- Which Games Can the Vulkan Vegas Bonus Be Used In?

- ❗ How Much Is the Vulkan Vegas Welcome Bonus Worth?

- How Long Do Withdrawals From Vulkan Vegas Take?

- How Does a Promo Code Work at Vulkan Vegas Casino?

- How to Use Cash Bonuses at Vulkan Vegas Casino?

- FAQ: Questions About the Promo Code

Spins made with the Vulkanvegas 55 Free Spins program are free. We recommend thinking about bonuses already during registration. If you have a special bonus code, tick the appropriate box in the registration form. Right after registering, we recommend using the welcome bonus package. It is important to think carefully about the size of your first deposits.

- If the problem persists, contact the casino's technical support team for help.

- Below we describe in detail what benefits Vulkan Vegas promo codes offer and why it is worth using them at casinos.

- Only registered users who meet these conditions can activate the offer.

- But that takes more than just a lot of good games.

As we mentioned, the Vulkan Vegas registration bonus first requires successful phone verification via SMS. It is then added to your bonus balance with a wagering requirement of x5, which means that to use it you have to play it through five times. Free spins at Vulkan Vegas are given to players only when they register at this casino for the first time. If you are a beginner, you may not have heard this yet, but at online casinos almost every bonus comes with certain conditions attached. It is important to keep track of code expiry dates so that you do not miss the chance to use them.
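As a rough illustration of what "wager x5" means, the sketch below computes the total amount you would have to stake before a bonus is released. It assumes the multiplier applies to the bonus amount only, as the paragraph above describes it; the function name `required_turnover` is hypothetical, and the casino's own bonus terms remain the authority on how wagering is actually counted.

```python
def required_turnover(bonus_amount: float, multiplier: float) -> float:
    """Return the total stakes needed to clear a bonus.

    Hypothetical helper: assumes the wagering multiplier applies to the
    bonus amount alone (not to bonus plus deposit).
    """
    if bonus_amount < 0 or multiplier < 0:
        raise ValueError("bonus amount and multiplier must be non-negative")
    return bonus_amount * multiplier


# A 100 zł no-deposit bonus with a x5 wager means 500 zł in total stakes.
print(required_turnover(100, 5))  # 500
```

The same helper covers a stricter requirement such as x40: `required_turnover(100, 40)` gives 4000.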

Are the Free Spins in Book of Dead Available Only to New Players?

Our casino has many mechanisms that help players stay in control of their gaming. Users of our site can set a limit on how much they can deposit within a given period. It is also possible to set time limits, which help control the time spent on the gambling platform. Most of our seasonal bonuses run for a fixed promotion period, while regular bonuses may have different wagering requirements for the bonus funds. For example, cashback funds must be wagered 5 times within 5 days, while the first-deposit cash bonus must be wagered 40 times within 5 days. Detailed terms and more useful information can be found in our bonus policy and in the rules of each promotion at kasyno-vulkanvegas.com.

The maximum value of the bonus under our offer is PLN, and the free spins are to be used in Book of Dead by Play'n GO. The Vulkan Vegas 2024 no-deposit promo code can be added to any type of bonus, which means players can enjoy extra benefits across different kinds of promotions. Codes most often appear as part of the welcome bonus and the no-deposit bonus, which may offer free spins or free cash. In short, it pays to be a loyal reader of ours to get access to exclusive offers such as the Vulkan Vegas casino promo code.

Bonuses in the form of promo codes are a great way to increase your chances of winning and to discover new games, while also rewarding loyal users.

Which Bonuses Can a Vulkan Vegas Code Activate?

Our portal, Polskie Sloty, is entirely devoted to gambling and focuses on providing the latest information about online casinos, including bonuses and promotions. We created this site to give our readers easy access to all Vulkan Vegas codes. If you want to stay up to date with new offers and not miss any opportunities, it is a good idea to follow our portal. We regularly publish information about promotions offered by online casinos, so you can be sure you will always have access to the best bonuses. Keep in mind, however, that the bonus is not granted immediately with the first deposit; a second deposit must be made after it.

- Our special offer works on exceptional terms, and its exclusivity is shown by the fact that if you use it, you will not be able to claim the welcome bonus.

- We realize that more funds to play with means longer and more enjoyable play, with much lower risk at the same time.

- Vulkan Vegas provides its customers not only with a rich portfolio of games, but also with a safe and trusted environment for online gaming.

- The characteristics of each type of game available at Vulkan Vegas are presented in the table below.

- For this reason, we decided to reward new players for their first three deposits.

The point is that a Vulkan Vegas bonus code is, in a sense, an extension of the standard bonus with additional benefits or more attractive terms. One of the more popular ways of raising players' interest in an online casino's offer today is the method we have popularized, based on the bonus codes used at Vulkan Vegas. Our website works in cooperation with Vulkan Vegas casino, which allows us to offer an exclusive bonus available only to our users. This offer cannot be claimed on any other site, nor at the casino itself.

Vulkan Vegas Promo Code 2024

After creating and confirming your account, you will find 50 free spins in the "Bonuses" tab. Remember that you only have 3 days to use the 50 free spins on Book of Dead without making a deposit at Vulkan Vegas. Once you meet the wagering requirements, your winnings are automatically transferred to your main balance. Once they understand the pros and cons of Vulkan Vegas promo codes, players can make informed decisions about using these offers. Always read the bonus terms and conditions carefully to fully understand the requirements and potential benefits. Our promo code also gives you the chance to receive free spins that can be used on selected games.

- For this reason, we require players to provide documents confirming their age, and we take precautions to prevent minors from accessing our gambling site.

- As a result, its terms of use are far more favourable, giving you a much better start at the casino.

- No, the free spins in Book of Dead are available to both new and existing players.

- By the way, a new casino from Vulkan is Casino GLACIERS, which is really worth a look.

Before your first withdrawal request you must pass verification by providing a copy of your passport. Players who deposit relatively little money into their account in particular will have a problem with this almost scandalous requirement. At the lowest level only 3% of all money lost is returned; at the highest level, Vulkan, it is 12%. So if 12% corresponds to 2000 euros paid back, you can calculate how large the preceding loss must have been. Vulkan Vegas casino is always happy to pamper even its regular customers with attractive bonus promotions, free spins and other surprises.
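The calculation hinted at above comes down to dividing the payout by the cashback rate. This is a minimal sketch that assumes cashback is a flat percentage of net losses over the qualifying period, as the paragraph implies; the helper name `loss_behind_cashback` is hypothetical.

```python
def loss_behind_cashback(cashback: float, rate: float) -> float:
    """Work back from a cashback payout to the net loss it was based on.

    Assumes cashback = rate * net_loss, with rate given as a fraction
    (0.12 means 12%).
    """
    if not 0 < rate <= 1:
        raise ValueError("rate must be a fraction in (0, 1]")
    return cashback / rate


# 2000 EUR paid back at the top (Vulkan) level's 12% rate
print(round(loss_behind_cashback(2000, 0.12), 2))  # 16666.67
# The same payout at the lowest level's 3% rate
print(round(loss_behind_cashback(2000, 0.03), 2))  # 66666.67
```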

FAQ on Vulkan Vegas Codes

In this article we have provided a range of information about the Vulkan Vegas promo code offer. We described the types of bonuses that can be offered with a promo code, such as the welcome bonus or the no-deposit bonus. We pointed out that each of these bonuses can be obtained with a code through our site, but players should pay attention to the differences between them. We explained how to activate the Vulkan Vegas promo codes found on our site, giving the exact steps needed to receive a bonus with a promo code. Finally, we answered the question of whether it is worth using the Vulkan Vegas Promo Code 2024, comparing the Welcome Bonus with and without a promo code.

- On our site you will also find a no-deposit bonus of 100 zł for registering and verifying your account at Vulkan Vegas.

- The Vulkan Vegas 2024 no-deposit promo code is one of the attractive offers currently available on the site.

- Getting online casino promo codes can be easy, as long as you know where to look.

- Making careful use of the code's full value can bring the greatest benefits, enhancing the overall gaming experience and potential winnings.

- The slot has a standard layout and many modern features, such as the Bonus Buy option or the Super Jackpot.

My name is Robert Łopuwski, and I have worked in the gaming industry for over 10 years, including as a professional reviewer since 2015. I joined Gryonline2 in 2016, where I am currently Editor-in-Chief. My favourite game is blackjack, and my favourite slot is Starburst. Cash bonuses can be used in all casino games, including Vulkan Vegas Fruit Machines. Other bonuses, such as the 90% weekly bonus and the maximum-conversion bonus, are applied automatically within their time frames, as long as the player meets all the conditions. This gambling site can be called one of the best in Poland.

Bonus: Coupon Code €1000 + No-Deposit Promotion

After entering the codes, players can enjoy extra in-game funds, free spins, welcome bonuses and other attractive offers. Many special offers take the form of spectacular free spin drops, which extend the time you spend playing your favourite slots. Unlike competing casinos, we offer free spins only on games loved by players all over the world. You surely know Book of Dead, Fire Joker, Starburst and Legacy of Dead. Our bonus free spins let you test and win only on the best slots of all time. It is worth keeping an eye on them and doing everything you can not to miss any of them.

- This Vulkan Vegas casino promo code is available only on our site.

- You find the VulkanVegas bonus you are interested in, select it and activate it with a single mouse click.

- You can receive up to 200% and 100 FS according to the table on the "Loyalty Program" page.

- Vulkan Vegas offers a wide range of payment methods for transferring real money to a player's account, including when using the Vulkan Vegas 2024 promo code.

What all the tournaments have in common is the chance to win prizes from an additional pool. Gambling has always attracted people because of the excitement, and nothing generates excitement like an element of competition.

Check Out the Promo Code Offers Shared by Other Casinos Too

It is better to find out in advance how to use the promotion, where to look for it and what to do when activating it. Thanks to the casino's licence and regulation, players can enjoy entertainment in complete safety. The Vulkan Vegas 2024 no-deposit promo code is an excellent opportunity for new users to experience the great atmosphere and win attractive prizes. This exclusive promo code lets players claim a bonus without having to make a deposit.

- With a second deposit of 60 to 199 zł, you can count on a 125% deposit bonus and 50 free spins on Book of Dead.

- Proof of this is the closed padlock symbol that appears next to our portal's web address.

- It is something that offers amazing possibilities, and often even lets you win something from nothing.

- This way you play exactly as you would for real money.

- One of the key benefits is the chance to receive free spins that can be used on selected games.

The online casino offers players excellent conditions, has a good reputation and is reliable. Register on the Casino Wulkan site, assess the quality of service, take advantage of the bonus offers and try to win.

New Games

The minimum deposit required for an extra bonus may depend on current promotions, so it is worth checking the terms of the specific promo code. The maximum bonus amount can also vary, so it is important to read the terms of the offer.

- Anyone who regularly checks these three main channels for distributing promo codes is sure to find something interesting before long.

When a player wants to spend considerably more on playing at Vulkan Vegas, the classic welcome offer comes out on top. Free spin offers should be sought on partner sites. If you are planning to activate a no-deposit bonus with a promo code, be sure to check the tips below and the list of steps so you don't miss anything.

Who Owns and Operates Vulkan Vegas Casino? How Long Have They Been Operating?

This time, however, it decided to make registration more attractive by adding not only the standard welcome bonus but also extra free spins. Such a promotion has many advantages, one of which is that you can use the casino without depositing any money. To receive the winnings from this bonus, you must wager it within 5 days of receiving it. You have probably already had a chance to explore our full range of services and seen that we can offer an incredible number of games and, above all, real games. Naturally, the selection includes the very best titles from the leading providers.

- Insta games bring a lot of fun without excessive mental effort.

- First, you need to know that free spins are rounds that cost you nothing but are played at a stake set in advance.

- All of them are among the market leaders and create games that are conquering the world.

- Currently Editor-in-Chief of Kasyno Analyzer, Justyna has come a long way as a professional writer and casino gaming enthusiast.

In summary, the Vulkan Vegas bonus offer is quite decent, but not outstanding, because of its overly strict requirements. If you decide to delete your Vulkan Vegas account, we can offer you several alternatives on our site. At first glance, Vulkan Vegas offers its customers an extremely attractive welcome package comprising up to euros and up to 125 free spins. For example, there is a standing promotion under which players can receive weekly Vulkan Vegas codes worth up to 90% of their previous deposits. The generous Vulkan Casino promo code offer immediately catches the eye, and we present it in more detail in the next section.

How to Find a Vulkan Vegas Bonus Code?

Just have fun playing for real money and raising your status in the Loyalty Program. One of the most popular classics, released in 2020 by Swintt.

The terms and conditions for using the promo code are available on the casino's website. With the code, players can increase their chances of winning and discover new games at no extra cost. The casino, in turn, gains satisfied players who are happy to come back, as well as a larger user base.

Full Mobile Version for Android

From time to time you may come across a Vulkan Vegas free spins code that has a small value but does not require a deposit. Another offer may provide a huge bonus but require you to deposit your own money. Players have different needs, and the casino always tries to meet them as conveniently as possible. Exclusive, time-limited offers usually provide the best playing conditions. Offers that are available all the time and can be used repeatedly, on the other hand, always give the same thing and are open to everyone. Registering a new casino account is the perfect moment to use your first Vulkan Vegas promo code.

To make it easier for players to navigate the Vulkan Vegas online casino portal, we have divided the games into several intuitive categories. The characteristics of each type of game available at Vulkan Vegas are presented in the table below. In fact, we are constantly improving our promotions program, as our goal is to keep surprising players with new bonuses (including free spins) and online opportunities. This means that if you deposit 100 zł, you will receive another 120 zł from us as a gift!
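The last example corresponds to a 120% deposit match (120 zł on a 100 zł deposit); that percentage is inferred from the figures rather than stated by the casino. The sketch below computes a matched bonus with an optional cap, since the maximum bonus amount varies between offers. The function `deposit_bonus` and its `max_bonus` parameter are hypothetical.

```python
from typing import Optional


def deposit_bonus(deposit: float, match_percent: float,
                  max_bonus: Optional[float] = None) -> float:
    """Bonus credited for a deposit under a percentage match offer.

    Hypothetical helper: match_percent=120 means a 120% match, and
    max_bonus (if given) caps the credited amount.
    """
    if deposit < 0 or match_percent < 0:
        raise ValueError("deposit and match percentage must be non-negative")
    bonus = deposit * match_percent / 100
    if max_bonus is not None:
        bonus = min(bonus, max_bonus)
    return bonus


deposit = 100
bonus = deposit_bonus(deposit, 120)
print(bonus, deposit + bonus)  # 120.0 220.0
```

Keeping the cap optional lets the same function describe both uncapped examples like this one and offers with an upper limit.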

Vulkan Vegas Promo Code and No-Deposit Bonus for Today

Second, deposit any amount above 500 RUB 5 times on the same day. If there are any problems with the code, contact the casino's technical support. In addition to the ever-popular credit card, you can also use various e-wallets such as Neteller, Skrill or Trustly. Deposits via Sofortüberweisung and Giropay are also supported, and the convenient Paysafecard is on the list too. Both the weekly bonus and the cashback paid out are subject to bonus terms and must be wagered within just 5 days. A modern slot from Pragmatic Play that works on the principle of forming clusters.

The size of the welcome bonuses you receive depends on the size of your deposit. All types of games in our online casino deliver plenty of excitement. If, however, you want a fully realistic experience, it is worth trying our live casino. Here the gameplay is not controlled by computer algorithms but by real people. You simply join a game run by a real Vulkan Vegas dealer in a game studio. You follow their every move via a video link and make betting decisions by clicking buttons.

What Types of Bonuses Can a Vulkan Vegas Code Be Offered For?

If you don't do this, our promotion will not be activated. If you forgot to enter the promo code here, don't worry: you can also do it in the "Bonuses" tab available after logging in to Vulkan Vegas. If you have already received the Vulkan Vegas promo code we offer, you probably want to know how to activate it. You only need to register at this casino, and the whole procedure takes less than a minute.

- Through their constant activity at the casino, players using our offer show us how much they appreciate it.

- Any withdrawal attempt risks losing them, along with all accumulated winnings.

- First, the player goes to the bonus section and activates the bonus.

The Vulkan Vegas 50 FS offer is worth using because it does not require any deposit. After quick registration and account confirmation, you can start playing immediately. The casino often runs additional promotions, and a special Vulkan Vegas free spins code lets you obtain bonuses on favourable terms. First, the Vulkan Vegas promo code offers additional bonuses and rewards that are exclusive to our users. This means we can receive larger cash bonuses or more free spins, increasing our chances of winning. Remember that every bonus has specific terms, such as wagering requirements, which you must meet before withdrawing winnings.

Insta Games

Its welcome package gives new customers both a no-deposit bonus and a deposit bonus. Judging by the slots on offer, the online casino is a fan favourite in the regions where it operates. It also has other ongoing bonuses for customers, such as loyalty bonuses and reload bonuses.

- The promo code is available only to new players and is valid for a limited time.

- Once the deposit has been made and credited, the welcome bonus is granted and can be used on various games available at the casino.

- Gambling is a specific market that has recently been undergoing huge changes.

- The chance to use free spins in this popular game is an extra attraction for slot fans.

- This means you must wager the bonus amount or the free spin winnings 40 times before you can withdraw any profits.

- You cannot download the Vulkan Vegas Poland casino to your phone.

This is a significant advantage for new users, who can try a variety of games and win prizes without risk. Remember that the maximum amount of bonus funds you can transfer to your real-money balance from the no-deposit registration bonus is exactly 100 zł. At Vulkan Vegas casino, withdrawals are processed lightning fast. First, you need to know that free spins are rounds that cost you nothing but are played at a stake set in advance. The value of that stake is always displayed. To claim the spins, simply follow the instructions and activate them.

See Our Best Offers From Other Casinos

Of course, you can also deposit smaller amounts, but then the full 1000 euros is simply out of reach. Vulkan Vegas offers its new customers an impressive welcome bonus of up to euros in bonus credit. But is this bonus really as strong as it seems? Or will reading the bonus terms bring a rude awakening? We took a close look at the Vulkan Vegas bonus offer and told you whether it is a fair deal or whether you should keep your hands off this promotion. And if for some reason you did not enter the code when registering at the casino, don't worry.

- Many players believe that NetEnt, alongside Microgaming, is the best provider of online casino games.

- If you have any questions, please contact our advisers.

- If you like to bet often and high, our special VIP program will surely meet your expectations.

- We encourage everyone to read our promotion terms before claiming any of the bonuses above or other promotions offered by Vulkan Vegas casino.

- The full name of this game is Rich Wilde and the Book of Dead, and the indomitable adventurer himself is its main character.

The casino usually prepares a special offer for new players that stands out for its exceptional accessibility and much better terms of use. All that remains is to go through the account creation process and enter the code you received at the right moment. Using a promo code at Vulkan Vegas Casino is simple and straightforward. First, you need to register on the casino's site to be able to use bonuses and promotions. Then, during registration or later, you will be able to enter the promo code.

Best Online Casinos in Turkey 2024: Top Online Casino Guide

The Highest-Quality Casino Sites: Reliable, Licensed and Popular

Contents

- Online Casino Regulations in Turkey

- The Best Casinos in Türkiye for 2024, Reviewed

- The Best Live Casinos in Turkey for 2024

- Which Casino Sites Are Reliable and Licensed?

- How to Achieve High Payout Rates at Casino Sites?

- Top 10 Turkish Online Casinos

- List of the Best Casino Sites

- Transparent Policies and Security Measures

- Is It Safe to Give Personal Information to Casino Sites?

- Best Online Casino for Online Slots: Casino Metropol

- Payout Rates and Audits

- Land-Based Casinos in Turkey

- Top Turkish Offline Casinos

- Criteria the Most Reliable Turkish Online Casinos Must Meet

- Vbet Casino

- Best Casino Site for Great Campaigns and High Bonuses: Jetbahis

- Legal Landscape of Online Casinos in Turkey

- Anadolu Casino

- High Payout Rates at Casino Sites

- Best Online Casino for Mobile Devices: Mobil Bahis

- What Bonuses and Promotions Do Casino Sites Offer?

- Best Casino Sites

- How to Sign Up at Casino Sites?

- How to Recognize the Addresses of Reliable and Licensed Casino Sites?

- Best Online Casinos Turkey Has to Offer in 2024

- Best Online Casino Site for Fast Withdrawals: Betboo

- Is Online Casino Legal in Turkey?

- New and Reliable Betting Site: Intobet

- What Are the Best Casino Sites?

You can see on the homepage of the online casino site WebbySlot that it holds a Curacao licence. Payout rates are one of the things players care about most at casino sites. At these sites, payout rates are regularly audited and published transparently, so players can trust the audit processes and aim for high winnings. Auditing and transparency are among the most important features of reliable, licensed sites. Through the licences issued by regulatory bodies, these sites guarantee the protection of players' rights.

As for the bonus opportunities available, new account holders receive a 100% Welcome Bonus when they make their first deposit. In addition, 22bet offers live support and customer service. One of the rules to follow when accessing online casino sites operating in Turkey concerns age.

Online Casino Regulations in Turkey

Long-time Casino Metropol members say it offers a range of opportunities, fun tournaments and excellent customer support. The sweet thrill of gambling brings hundreds of gambling fans together at many online casinos every day. People who want to taste the thrill of gambling that online casinos offer should look for the highest-quality Turkish online casinos. Another way to make sure online casinos do not cheat is that they are audited, and that the audit reports of reliable ones can be viewed. Sites that are not subject to any audit are not included in our list.

- Despite this, many gambling fans in our country can play online whenever they want, without any problems.

- This way, players can play with peace of mind and confidence.

- You must make a deposit to claim the welcome bonus above.

- In addition, payout rates are regularly audited and published transparently.

- 1xSlots offers users who open a new account a 1500 Euro Welcome Bonus, along with 30 Free Spins.

The Turkish online casinos we have selected may be better than those in your country in terms of variety and deposits/bonuses. Customer satisfaction is the main goal of reliable, licensed casino sites. Producing quick and effective solutions to players' problems, offering bonuses and promotions, and providing excellent customer service are essential elements of these sites. As a result, players feel valued and are satisfied with their casino experience. TR.Casinority.com is an independent review site in the online casino field. We provide reviews of casinos, bonuses and online casino games.

The Best Casinos in Türkiye for 2024, Reviewed

The licences of these sites are designed to protect players' rights and provide a fair gaming experience. Reliable and licensed casino sites stand out for their transparent policies, security measures and customer-focused approach, so players can rely on them for both security and customer satisfaction. 100% deposit bonuses are the most popular bonus type, offered by almost all casino sites. When reviewing casino sites, the facilities and advantages they offer should be taken into account.

That is why we have compiled a list of the most solid online casino sites on this page and explained in detail below the criteria we considered when selecting them. After looking through the list of the best sites below, you can examine the details. The casinos in the Casinority Türkiye catalogue are for playing with real money, and you should only deposit money you can afford to lose. Use tools such as deposit limits or self-exclusion to control your gambling. If you have a gambling addiction, be sure to contact a gambling addiction help centre and do not play for real money. Cashback bonuses give users who lose their bets another chance.

The Best Live Casinos in Turkey for 2024

Here you can always find a tournament or an empty seat at a poker table. All variants of poker, such as Texas Hold'em Poker and Turkish poker, are also available here.

- Casino Metropol is the best casino site for those who want to play slots.

- For this reason, your deposit and withdrawal transactions are completed securely.

- In other words, whether we win or lose, a certain percentage goes to the casino site's coffers.

- Betboo, whose name we have seen so many times on social media, has gained hundreds of new members.

This also matters if you want to avoid problems when depositing money on the site and withdrawing it. Casino enthusiasts are advised to choose these sites for a safe and profitable experience. Casino sites with high payout rates meet players' expectations and contribute to the growth of the casino sector in Turkey. Turkey's best casino sites are audited platforms offering quality service that have earned players' trust. Choosing them is important for a safe and profitable experience. Trustworthy, licensed casino sites are those that have won players' trust and are approved by regulatory bodies.

Which Casino Sites Are Trustworthy And Licensed?

We also recommend reading the information we have shared on legal regulations and pathological gambling. Turkey's leading casino sites offer their players high payout rates. Their wide range of game categories meets the needs of players with different preferences. Payout rates are also audited regularly and published transparently. When you sign up at a gambling site, you may be asked for details such as your name, address and date of birth.

As a result, your deposits and withdrawals are completed securely. If any problem comes up, you can get the support you need at Anadolu Casino, which offers games and customer support in Turkish. Trustworthy, licensed casino sites stand out for their transparent policies and strong security measures. Protecting players' personal and financial data, enforcing fair gaming rules and securing payment transactions are among these sites' priorities. This way, players can play with peace of mind.

How To Get High Payout Rates At Casino Sites?

Although it is known for sports betting, you can find everything you are looking for among its casino games. Casino Metropol appears again as one of the highest-quality, most trustworthy Turkish online casino sites, holding an official gaming license and one of the largest user bases. At the Casino Metropol online casino you can play a variety of interesting games in Turkish lira. In conclusion, the best casino sites in Turkey stand out for being trustworthy, licensed and offering high payout rates, which makes them an attractive option for players. These sites will keep working to offer casino enthusiasts a safe and profitable experience.

- We offer reviews of casinos, bonuses and online casino games.

- Some casino sites offer no-deposit bonuses, giving you a bonus without requiring a deposit.

- At the 22Bet online casino you can play games in Turkish and get customer support in Turkish.

- This site, ideal mainly for sports bettors, contains a lot of useful information.

That is why we wanted to say a little more about bonuses. Below you can see the bonus types offered by sites serving Turkey. At online live casino sites, users cannot interfere with most of the systems, and no real user can log into this software and change it. First of all, every casino has a feature called the "house edge". Because of it, casinos have no need to cheat: whatever happens in an individual game, they always come out ahead in the long run.

The 12 Best Turkish Online Casinos

This is required by the bodies that issue licenses to these online casino sites. The aim is to protect players and to take measures against underage gambling, money laundering and addiction. Below you can see the steps to follow to sign up and play at an online casino site. If you have spent more than a few years in the betting world, you have almost certainly heard of Süperbahis, because Süperbahis is one of the oldest legal casino sites.

- IntoBet, which runs on the TG Lab sports betting platform, gives a bonus of up to 3,000 TL every month.

- Producing fast and effective solutions to players' problems, offering bonuses and promotions and providing excellent customer service are essential features of these sites.

- Hovarda suddenly drew attention by outdoing itself and the industry with a 300% bonus of up to 1,500 TL on the first deposit.

- On the home page of the online casino site WebbySlot you can see that it holds a Curacao license.

- First of all, every casino has a feature called the "house edge".

The casino sector in Turkey is growing rapidly and becoming more popular. Trustworthy, licensed online casino sites with high payout rates are becoming an attractive option for players. In this article we review Turkey's best, most trustworthy and licensed casino sites.

List Of The Best Casino Sites

Our mission is to make your gambling experience a success by connecting you with the safest and most trustworthy casinos. In this article we have compiled Turkey's best online casinos for you. Players can find each other at trustworthy Turkish online casinos such as Webby Slot, Gaming Club and CasinoMaxi. You can play free demo games on TR.Casinority. WebbySlot is one of the online casinos offering reliable service to Turkish online casino players.

- We will also cover the high payout rates, bonuses and promotions, fast payment options and mobile-friendly gaming experiences these sites offer.

- When reviewing casino sites, you should take into account the facilities and advantages they offer.

- If you have spent more than a few years in the betting world, you have almost certainly heard of Süperbahis.

Mehmet is a software specialist living in Cyprus who comes from inside the casino world. After supplying casino platforms for many years, he founded the casinotr.live site to share his experience, so you can be sure the articles come from experienced hands. While continuing his master's studies in Cyprus, he also devotes time to developing the casino blog. These bonuses can take the form of cash, free spins or cashback on lost bets. You will find plenty of bonuses, cryptocurrency winnings, reward programs and other great opportunities.

Transparent Policies And Security Measures

22Bet is one of the best Turkish online casino sites according to the filters we applied. At the 22Bet online casino you can play games in Turkish and get customer support in Turkish. Gaming Club offers new account holders a 100% welcome bonus, and it also tries to reward its users with a different special bonus every day of the week. Gaming Club can be counted among the trustworthy Turkish online casinos, as it holds a company registration under the Malta Gaming Authority. Turkish players can therefore play safely at the Gaming Club online casino.

- Below we also share these sites' features, detailed information and bonuses.

- These sites guarantee the protection of players' rights through licenses issued by regulatory bodies.

- You can join the Turkish live roulette or Turkish blackjack lobby, or play at live casinos.

- In our table we share this month's list of the best casino sites.

We mentioned that, when using online gambling reviews, there are many criteria to consider before trusting an online casino. At this point we should explain which topics and details these criteria cover. We are aware of this and made our assessments accordingly. Casino Maxi offers the best rooms for poker lovers.

Is It Safe To Give Personal Information To Casino Sites?

In addition, players in Turkey can spend time with confidence at casino sites that are audited regularly and whose reliability has been confirmed. CasinoMetropol offers a very wide range of current promotions. You can join the Turkish live roulette or Turkish blackjack lobby, or play at live casinos. Casino Metropol is one of the sites holding an MGA license. You can play at the WebbySlot online casino in Turkish lira.

Turkish users therefore play at gambling sites that serve Turkey. Naturally, players at online casino sites may wonder whether this is legal in Turkey. We can state clearly that online casino sites are not legal in Turkey. Top Turkish online casinos should be filtered by certain criteria, because casino sites differ from one another in their features and bonuses. As the TR.Casinority team, we took all these criteria into account and selected the best online casinos for you.

The Best Online Casino For Online Slots: Casino Metropol

Although gambling is not legal in Turkey, you must be over 18 to play at online casino sites. Users under 18 cannot open accounts or play games at online casinos. 1xSlots offers new account holders a welcome bonus of 1,500 euros plus 30 free spins. Still looking for an online casino that suits your needs?

- Here you can be sure to find a reliable casino site.

- While continuing his master's studies in Cyprus, he also devotes time to developing the casino blog.

- This also matters if you want to avoid problems when depositing money on the site and withdrawing it.

- Casino Maxi offers the best rooms for poker lovers.

Of course, our criteria go beyond simply signing up to websites and browsing them. We know exactly how we assess each site, and we spend hours on each one to learn what works and what doesn't. Because they have so many long-standing members, they make few design changes so as not to disrupt users' habits.

Payout Rates And Audits

You can enjoy playing at live casinos in Turkish. Helpful, polite Turkish-language customer support is also among the features the 1xSlots online casino is proud of.

Turkey's best online casino sites work continuously to meet players' expectations. These sites offer a wide range of games, high payout rates, attractive bonuses and promotions, fast payment options and mobile-friendly experiences.

- When choosing a site, either pick quickly from the table or compare the options by reading the reviews below.

- Protecting players' personal and financial data, enforcing fair gaming rules and securing payment transactions are among these sites' priorities.

- Turkish players can therefore play safely at the Gaming Club online casino.

- Our list also includes a site built especially for those who like to play casino games on their phones.

- Finally, in our list of top Turkish online casinos, we would like to introduce the VBet Casino online casino.

The best live casinos in Turkey do everything they can to make registration easy. Opening an account at a new casino site, making a deposit and starting to play real-money games takes only a few minutes. Still, we will go over the details needed to create an account. It is important for users to understand which sites actually pay out and which do not. One of the biggest differences is that some casino sites operate without any company registration, while others are registered companies.

Land-based Casinos In Turkey

However, some online casinos restrict which slot games you can use free spins on. This long-running site, where you can find every casino game, also stands out for its bonuses. In addition to all the classic casino games, Casino Metropol has a wide variety of slot machines. People who want to play at online casinos come across new casino sites every day. While there are many trustworthy sites for playing casino games, the number of illegal and fraudulent casinos is also quite high.

- If any problem comes up, you can get the support you need at Anadolu Casino, which offers games and customer support in Turkish.

- Hovarda Casino is built around the most popular and most frequently chosen casino games.

- Licenses are of great importance for a fair gaming experience and financial security.

- However, some online casinos restrict which slot games you can use free spins on.

They can find support groups to join and get help making healthier decisions. As soon as your registration is complete, you may have 50 TL in your account to bet with. This bonus type is a good way to explore a site's features without having to deposit your own money. You have probably heard of the payout percentage that applies to slot games. A 97% payout rate, for example, means that over the long run the game pays back 97% of the money wagered on it to players, while the remaining 3% goes to the casino. Hovarda suddenly drew attention by outdoing itself and the industry with a 300% bonus of up to 1,500 TL on the first deposit.

Top Turkish Offline Casinos

We will also cover the high payout rates, bonuses and promotions, fast payment options and mobile-friendly gaming experiences these sites offer. The best casino sites in Turkey stand out for being trustworthy, licensed and offering high payout rates. They provide a wide range of games that meets players' needs, attractive bonuses and promotions, fast payment options and mobile-friendly experiences. Bets10 earns its place among the best Turkish online casinos that meet the criteria we described. It lets new members choose between several welcome bonus options, including a 200% bonus of up to 200 Turkish lira and a 100% bonus of up to 1,000 Turkish lira, campaigns that double or triple your deposit.

Some casino sites offer no-deposit bonuses, giving you a bonus without requiring a deposit. This means you may find money in your account as soon as you sign up. Bonuses were an important factor in our review of the best casino sites.

Criteria The Most Trustworthy Turkish Online Casinos Must Meet

Licenses are of great importance for a fair gaming experience and financial security. The best live casino sites in Turkey have hundreds of real-money slot games. Some are very exciting, some are fun, and some are hard to stop playing. Of course, we must remind you once again that casinos are completely illegal in Turkey. It is therefore very important that the online casino you register with is trustworthy and of good quality. When you play at quality online casinos, the company fully protects your personal information.

- Betboo, which has partnerships with Süperbahis, made its name known by signing advertising deals with figures such as Ashton Sins and Mia Khalifa.

- Of course, our criteria go beyond simply signing up to websites and browsing them.

- Users under 18 cannot open accounts or play games at online casinos.

- Turkey's leading online casino sites offer their players high payout rates.

- Trustworthy, licensed casino sites stand out for their transparent policies, security measures and focus on customer satisfaction.

More than 200 games, including blackjack tables, roulette, video poker, bingo and jackpots, await you at Discount Casino. On our site TR.Casinority.com, Turkish players can use filters to choose an online casino that matches their preferences. As long as you play at a licensed casino, there is no harm in providing your information, because every licensed casino undergoes a thorough review before it starts operating. If a site gives you a 50 free spin bonus, you can use it on a slot game to spin the machine 50 times for free.


Download Mostbet For Android: Download The Mostbet Mobile App For Android

Download The Mostbet App From The Official Site For Free On Android: Mostbet Bookmaker APK

Content

- Online Casino

- Registration At Mostbet

- How To Place A Bet In The App?

- How To Install Mostbet On Android For Free?

- Current Working Mostbet Mirror For Today

- Features Of The "Most Bet" Mobile App

- Can I Bet On International Sporting Events At Mostbet?

- Is Mostbet A Licensed Casino?

- Download Mostbet Bookmaker: A 3-Step Guide

- Advantages Of The Mostbet App For Android

- Reliable Sources For Finding Backup Sites

- Guarantees From Mostbet

- Download The Mostbet App For Android (APK File)

- Mostbet Welcome Bonuses

- Mostbet App For iOS And Android: Download And Installation Guide

- Mostbet: Online Bookmaker For Sports And Casino Betting

- Overview Of Popular Slot Machines

- Download The Mostbet App To Your Phone: Mobile Version For Android

- Mostbet Drawbacks

- From The Company's Official Site (Mostbet RU)

- The Mostbet App For Russia

- Key Features Of Mostbet

- Get 100 FS For Installing The Mostbet App

- Advantages Of Downloading

- How To Find And Download A Mostbet Mirror For Android?

- Mostbet APK Download: Where To Find The App Safely And Without Viruses?

- User Reviews Of The Mostbet App

- Registration In The Mostbet App

- Bookmaker News

Then open the bet slip via the link at the bottom of the screen, enter the stake and bet type, and confirm. To open your account, bring up the main menu and tap your ID number. Mostbet support sends a link to a mirror for logging in at any time of day or night. These entertainment options are highly popular. To find a mirror, open any browser and type the query into the search bar.

- Any user over 18 without a gambling addiction can complete it.

- If you are wagering a sports betting bonus, a number of additional rules apply.

- You will find the current link to the bookmaker's site (as well as the link to the app) on this page, which makes the task even simpler.

- When using text materials from the site, a hyperlink to Sport.ua is required.

- For example, uninterrupted access to the portal and all its features, so the player does not need to worry about access.

- With any of the chosen methods, the user can enter a promo code to receive extra rewards from the bookmaker.

Here you will find a comprehensive review of the app with an assessment of its advantages and drawbacks. ✔️ If you have already created an account on the bookmaker's desktop site, you do not need to register again. ✔️ Next, check whether your device allows installing files from a third-party source. If a bet wins, the bookmaker promptly credits the winnings to the bettor's account.

Online Casino

The difference lies in how features are laid out in the mobile app and the web version. You can open the mobile app without a working internet connection, although most features will be unavailable, and playing itself is easier on a PC thanks to the larger screen. Mobile apps for bookmakers are a convenient way to place bets right from your device.

- You can open the gaming portal while traveling, at your country house or anywhere else in the world.

- In the first case, the reader downloads the app from the official App Store catalog.

- This version is compatible with iPhone, iPod Touch and iPad devices running iOS 11.0 or later.

- By following the link to the current casino mirror, you can download the Most Bet app to your Android phone or iPhone without any problems, bypassing site blocks.

As a bookmaker, it has always shown itself in a positive light, offering clients access to a wide range of interesting markets. Mostbet is considered one of the few honest online casinos and betting platforms. It is also very important that the chosen payment method is reliable and that your financial and personal data remain confidential. Mostbet users should read the club's rules carefully.

Registration At Mostbet

The mobile app for phones and tablets fully replicates the functionality of the official site. The usual installation from the Play Market is not available for gambling apps. It is also worth downloading the Mostbet app for Android so that you can wager the bonuses you receive faster, since the app lets you place wagering bets wherever you are. The app offers a wide range of sporting events and markets to meet users' needs and provide variety in betting choices.

To ensure uninterrupted access to its services, Mostbet creates alternative (mirror) sites. These mirrors can have different web addresses, sometimes even a random set of characters such as 777 or 888. Links to current, verified MostBet backup resources for 2024 are collected on our site.

How To Place A Bet In The App?

Thanks to games from leading providers such as NetEnt, Microgaming and Evolution Gaming, the Mostbet APK delivers a solid and engaging experience. The app also supports more than 100 cryptocurrencies, including Bitcoin and Ethereum, for secure and fast transactions. Its convenient interface and robust security measures make it a reliable choice for mobile gaming.

Once you have found the app, check for updates and install any that are available, following the on-screen instructions. The Mostbet app can be downloaded from the official site (for Android devices) or from the App Store (for iOS devices). The developers built the app for both major operating systems, but this article focuses on Mostbet for Android.

How To Install Mostbet On Android For Free?

The iOS app cannot be downloaded directly from the site; the link redirects the visitor to the App Store. The current version is compatible with iPhone, iPod Touch and iPad devices running iOS 11.0 or later. You can use the installed app immediately after unpacking it and checking it for safety.

- All the user needs to do is tap the download icon, after which the download and installation begin.

- The mirror provides full access to all of the bookmaker's features and is intended for those who want to use Mostbet's services without restrictions.

- Yes, you can download the Mostbet mobile app even if the official site is blocked.

- A user can place bets once registration is complete, but cannot request a withdrawal until passing verification.

The selection of live matches is also identical, and the odds update promptly. You can download the latest version of the Android app via a direct link on the bookmaker's portal. To get the best experience and all the latest features and fixes, it is very important to keep the Mostbet app up to date. Before applying, we recommend reading the terms of the specific tournament. If none of the methods above helped you reach the gaming portal, we suggest using an alternative. To place a bet in the Mostbet app, open the main menu, go to the "Sports" section, choose an event and tap the market you want.

Current Working Mostbet Mirror For Today

The installation process is just as simple and similar to the steps on Android. You can currently download the Mostbet app from the company's official site for both modern operating systems. The app is updated periodically so that users get more features and convenient access to their favorite bets at any moment. The Mostbet app provides fast, convenient access to the bookmaker's service on mobile devices from anywhere in the world. It is in no way inferior to the main service in functionality, and it lets you place bets and follow sporting events right from your device.

- We understand that questions or problems may arise during play, and we are always ready to help.

- To take part in the promotion, register on the Mostbet site and place bets on Euro 2024 matches at odds of 1.3 or higher.

- Players can enjoy a variety of slots, table games and live dealer games, as well as an extensive sportsbook.

The user no longer needs to open a browser every time, search for a mirror, visit unsafe sites and re-enter login details. Mostbet offers VIP bookmaker services as well as slot machines, casino games, poker and much more. The Mostbet app can be updated by finding it in the App Store (for iOS devices) or on the official site (for Android devices). Once you have found the app, check for updates and install any that are available, following the on-screen instructions. The range of games includes a collection of modern slots, classic slot emulators and live games. Remember that only users who have completed registration and confirmed their details can log in.

Features Of The "Most Bet" Mobile App

Odds in the app update promptly, allowing the player to react quickly to any turn in the game. Downloading the client is completely free, and the Mostbet app contains no viruses. All the user needs to do is tap the download icon, after which the download and installation begin. The mobile app gives you access to the betting lines in a few taps and keeps you independent of possible restrictions or blocks. In a sense, the app is a mirror that removes the need to keep searching for a working domain.

- If your smartphone's specifications are below those listed, the app may run incorrectly or not at all.

- You can download the latest version of the Android app via a direct link on the bookmaker's portal.

- A Mostbet mirror differs from the official casino site only in its domain name; all functional characteristics are fully preserved.

- Before using a bonus, you need to read and understand the terms and conditions that apply to that specific offer.

- Small differences can also be seen in transaction processing speed, support response times and update frequency.

Below we show you step by step how to download and install the bookmaker's app without difficulty. The Mostbet APK for Android devices offers a gaming experience tailored to users in Russia. Designed to meet the needs of both beginners and experienced players, the APK provides access to a wide range of casino games and sports betting options.

Can I Bet On Russian Sporting Events At Mostbet?

Bettors note that the app offers considerably more useful features than the official site. To get a bonus in the Mostbet app, choose the bonus you want to use and meet the conditions set out in its terms and conditions. You will not need to spend time on multi-step workarounds for blocks or on searching for unofficial copies. The abbreviation "apk" is simply the extension of the archive file the user downloads. The app is packaged specifically in this format so that download and installation are fast and safe. Note first that bonuses are not free money: they must be wagered according to the wagering requirement set by the bookmaker.

- To create an account this way, choose the "Via social network" registration method.

- Here you will find a comprehensive review of the app with an assessment of its advantages and drawbacks.

- The minimum bet is 500 RUB or the equivalent in another currency.

The project's editors may not share the authors' views and are not responsible for authored materials. While these tips may improve your strategy, no method guarantees success. Always play responsibly and enjoy the game. You can subscribe to Mostbet's email newsletter to receive current links to working mirrors, or join the Most Bet Telegram channel. As a last resort, you can contact Mostbet support directly for the information you need. If you do not manage to wager the bonus in full, you will not be able to withdraw it.

Is Mostbet A Licensed Casino?

When you open the Android app, you can explore its features as well as the details of its design and interface. The Mostbet app can be updated by finding it in the App Store (for iOS devices) or on the official site (for Android devices). The app also offers a convenient interface that makes betting on sporting events as simple and intuitive as possible. Thematic betting portals also post links, but the key factor there is how much you can trust them. In the fourth case, the reader downloads the app from the official App Store catalog.

After wagering, you will be able to use the credited funds to place bets. Next, you confirm your age and your agreement with the site's terms, and a link is sent to your email that you need to follow. Once you have confirmed your registration, you can use all the features of the site and the app.

Download Mostbet Bookmaker: A 3-Step Guide

This software gives bettors access to all the features of the official Mostbet service on smartphones and tablets. You can find the Mostbet APK file elsewhere on the internet if for some reason you cannot download the app from the official Mostbet site. However, downloading APK files from unofficial sites can be dangerous and expose your smartphone to viruses and malware. It is always recommended to download apps from an official source. The functionality and design of the backup site are identical across all of its variants.

- Newcomers get lost in the navigation and do not know whether to play for real money or just for free.

- The app uses proxies, so blocks do not affect it.

- Remember that gambling is neither a source of income nor an alternative to work.

- Through it you can start a live stream of a game or check the results of your bets at any time.

Sweet Bonanza, Gonzo's Quest, Lucky Joker and other hits are available, along with many new releases. Every machine offers a demo mode that lets you play without the risk of losing. At the first signs of addiction, seek help without delay. Remember that gambling is neither a source of income nor an alternative to work. For its part, the operator does everything it can to give its clients continuous access to the service.

Advantages Of The Mostbet App For Android

The mobile app is a convenient tool that lets you do everything you can do on the gaming portal's official site. It takes up very little storage space and is easy for any user to configure. The app greatly simplifies the gaming process and makes it more convenient and, above all, available anywhere. You can open the gaming portal while traveling, at your country house or in any other country in the world. Practically every bookmaker offers its players a mobile app.

- Betting-themed portals also post links, but here the level of trust matters most.

- We know that questions or problems can come up during play, and we are always ready to help.

- Live casino at Mostbet is a feature that lets users play casino games with real dealers in real time via video stream.

- Visitor reviews suggest that updates adding filters, video, and a support chat would significantly raise the Mostbet app's rating.

- To download the Mostbet app on an iPhone, visit the official website or a mirror, where a direct download link is provided.

We will also compare the pros and cons of the mobile app with the desktop version. As soon as a user lands on the site, a banner at the top automatically offers to download the app to the phone.

Reliable Sources for Finding Mirror Sites

After that, the user only needs to follow the first link in the search results, which leads to a Mostbet mirror. The editors of this project may not share the authors' views and are not responsible for authored content. This way you can enjoy all the entertainment the platform has to offer. Mostbet supports a number of languages, including English, Spanish, Italian, Russian, and Portuguese.

- In Live betting, wagers are accepted a second faster than on the desktop or mobile site.

- A mobile app adapted for Android and iOS also serves as a full-fledged alternative.

- Most Bet is not just a place to bet; it is a platform where every player feels cared for, gets the most out of the game and, of course, has a real chance of winning.

- You can also find the resource in the casino's Telegram channel, on social media, and on themed forums.

- A bookmaker's mobile app is a convenient way to bet on sports from your own device.

- Once you find the app, check for updates and install any that are available, following the on-screen instructions.

To do this, open the Mostbet website or a mirror on your phone, scroll to the very bottom of the page, tap the Android logo, tap it again, and confirm the download. When the download finishes, the system will offer to open the .apk file and start the installation; agree and wait for the process to complete. Our editors explain where to download Mostbet for Android for free and in which cases installation becomes risky. Settings, push notifications, and the rest of the functionality work as usual.

Guarantees From Mostbet

The first method is to change your current IP address to one from another country where the bookmaker's activity is not prohibited. The company's website was blocked, and until 2018 its name was associated with illegal operation. The official Mostbet app for iPhone/iPad is even available in the App Store. For a quick download, simply go to the bookmaker's page, register, and start the download. At the top of the portal there is a download button that lets you quickly start installing the app on your device. Mostbet's developers have tried to give the app the simplest and most convenient interface possible, one that even an inexperienced user can figure out without trouble.

Registration and login are required to unlock all features of the gambling service. Guest access is not supported: you cannot even view the betting lines without logging in. The app lets you set limits on bets and deposits and offers temporary self-exclusion to support responsible gambling. It is also very important to ensure the security and reliability of the chosen payment method, as well as the confidentiality of your financial and personal data.

Download the Mostbet App for Android (APK File)

It is protected by the same technologies and data protocols, ensuring the security of your personal data and financial transactions. You can download the Mostbet mirror for Android via the links provided below on our site. Today every bookmaker and online casino has a mobile app, and Mostbet is no exception.

- The app offers a wide range of sporting events and markets to meet users' needs and provide a varied choice of bets.

- The deposit section offers a range of convenient payment methods.

- The fastest way to become a Mostbet customer is the one-click registration the bookmaker offers.

- Here you can bet on sports, watch live streams, play slots, and activate available bonuses.

- To create an account in the Mostbet app, you need to provide personal details, including your name, date of birth, and contact information, and also choose a password.

- It gives you not only value and security but also a friendly interface that makes playing a real pleasure.

Now users can open their smartphone at any moment and start predicting sports results or playing the most popular video slots. The mobile app offers players many advantages, for example hassle-free access to the portal and all its features, so players do not need to worry about being blocked. A mobile app works as a kind of screen that opens access to blocked resources. That is why anyone who wants to download the Mostbet app (a working mirror) has to look for third-party sources to get it.

Mostbet Welcome Bonuses

By placing an accumulator bet on the required number of events or more, you can receive a bonus. The bookmaker charges no withdrawal fees; withdrawal times range from a minute to several days, depending on the chosen method. After that, all available outcomes for the event and the odds for each of them are displayed.

Mostbet is a prominent example of a progressive gambling company that offers users a "pocket" app. To keep fans from missing a single match, bookmakers took up developing mobile apps. First, this benefits bettors, since they receive up-to-date notifications in real time, not only when they get to their computer in the evening. Second, bookmakers significantly expand their audience reach by rolling out mobile software. If you notice any signs of addiction, seek help from a specialist immediately. Remember that gambling cannot be a source of income or an alternative to work.

How to Make a Deposit at Pinup Casino and Play Online in Kazakhstan

Contents

- How to Make a Deposit at Pinup Casino for Online Games in Kazakhstan

- Getting Started at Pinup Casino: How to Top Up Your Account in Kazakhstan

- Online Casino Basics: Depositing at Pinup Casino in Kazakhstan

- Step by Step: Topping Up Your Pinup Casino Account for Kazakh Players

- Frequently Asked Questions About Pinup Casino in Kazakhstan

How to Make a Deposit at Pinup Casino for Online Games in Kazakhstan

Search for "How to make a deposit at Pinup Casino for online games in Kazakhstan" to learn how to top up your account and enjoy our best online games in Kazakhstan. Pinup Casino offers many payment options, including bank cards, e-wallets, and mobile payments. For a fast and secure transaction, choose a payment method that is reliable and convenient for you. After a successful deposit, you can start playing our exciting real-time games! If you have any questions or need help, our 24/7 customer service team is ready to resolve any issue.

Getting Started at Pinup Casino: How to Top Up Your Account in Kazakhstan

To start playing at Pinup Casino and make a deposit in Kazakhstan, go to the top-up page after registering.

You can choose one of the popular payment systems, such as Visa, Mastercard, Qiwi, Yandex.Money, WebMoney, and others.

Then enter the deposit amount and the details required to process the payment.

After a successful transaction, you will be given temporary credentials to log in to the casino.

Now you are ready to play our best slots, roulette, blackjack, poker, and other online games for real money.

Online Casino Basics: Depositing at Pinup Casino in Kazakhstan

In this article we cover the basics of online casinos and explain how to top up your account at Pinup Casino in Kazakhstan.

Pinup Casino offers several ways to deposit electronic money.

You can use bank transfers, e-wallets, or credit cards.

To make a deposit, go to the official Pinup Casino website and select "Top Up Account".

Then choose a convenient top-up method and follow the on-screen instructions.

Step by Step: Topping Up Your Pinup Casino Account for Kazakh Players

Players from Kazakhstan at Pinup Casino may need to top up their account. Here is a simple step-by-step guide:

1. Start by clicking the "Deposit" button on the main page.

2. Choose a convenient payment method, such as a card or an e-wallet.

3. Enter the amount you want to deposit into your account.

4. Check all the details before confirming the payment.

5. Wait for confirmation that the funds have been credited to your account.

Ivan, 35:

I live in Kazakhstan and was looking for ways to have fun online. I learned that at Pinup Casino you can make a deposit and play games permitted under Kazakh law. I chose a payment system supported in my country and topped up my account in a few clicks. I found the game graphics thrilling, and the bonuses and promotions made my gaming session even more interesting.

Alina, 28:

How do you make a deposit at Pinup Casino and start playing in Kazakhstan? I asked myself this question before I started playing. It's actually quite simple! I used the Pinup Casino mobile app, which works perfectly on my device, to make a deposit and start playing. Support is also available around the clock, which is very convenient, since you can always get help when you need it.

Frequently Asked Questions About Pinup Casino in Kazakhstan

How do I make a deposit at Pinup Casino?

1. Register on the official Pinup Casino website.

2. Choose a convenient payment method for your deposit.

3. Enter the desired amount and confirm the payment.

Now you can enjoy online games in Kazakhstan!


Download the Mostbet App for Android From the Official Mostbet Website for Free

Download Mostbet for Android: Get the Mostbet Mobile App for Android

Content

- How to Place Bets From the APK Mobile App

- Differences Between the Mostbet App and the Mobile Site

- Looking to Download the Mostbet App?

- How Can I Register an Account at Mostbet?

- How to Use Bonuses in the Mostbet App

- Can I Bet on Cricket in the Mostbet App?

- Download the Mostbet App for PC (Windows and macOS)

- Mostbet Casino: Play for Real Money

- Download Mostbet for Android

- What Bonuses Does the Mostbet Bookmaker Offer?

- Download Instructions: How to Install Mostbet on Android

- App Screenshots

- Interface of the Mobile Version of the App

- How to Contact Support Through the Mostbet App?

- Download the Mostbet App

- How to Download the Mostbet Bookmaker App for Android for Free: Step-by-Step Instructions

- How to Download Mostbet on iPhone?

- How to Install the Mostbet APK?

- Working Mostbet Mirror for Today

- How to Download Mostbet From the Bookmaker's Official Website?

- How to Deposit and Withdraw Funds in the App?

- Advantages of Using the Mostbet App

- Can I Download It From Play Market?

- How to Register in the Mostbet App for Android?

- Features of the Mostbet Mobile App

- Mostbet App Review

- How to Download Updates for the Mostbet App?

- Differences From the Mobile Version

- Download Mostbet for Android (APK) and iOS

- Referral Bonus

To do this, an email with the corresponding link is sent to the address the customer provided. The MostBet bookmaker offers a variety of bonuses for both new and regular customers. Details of the bonus offers can be found in the Promotions section.

- This is one of the key factors that make MostBet attractive.

- To claim them, players should first read the conditions for receiving them and then meet all the requirements.

- You can review each of them in the "Bonuses and Promotions" section.

- MostBet follows the principles of convenience and security in financial transactions.